2.0.0

Major Features

MCP (Model Context Protocol) Support - The Future is Here!

Wingman Ultra now supports MCP servers, which means you can connect external tools and services to your Wingmen. Think of it as a plugin system that works with any MCP-compatible tool. The really cool thing is that MCP servers can either run locally on your machine and interact with programs you might already be using (like Notion, Figma etc.) or they can be hosted remotely (if they’re stateless and don’t need local file access). It’s basically like a Skill but on a different server, meaning it can be updated independently from Wingman AI and it’s super easy to connect to. MCP is like the official protocol for Skills that didn’t exist yet when we introduced Skills to Wingman AI.

What does this mean for you?

- Access to more data: Connect to web search, game databases, and other programs or services

- Community-driven: Developers can create MCP servers in any language, and you can use them in Wingman AI. There are already thousands of MCP servers out there!

Built-in remote MCP servers, hosted by us for you:

- Date & Time Utilities: Current date time retrieval and time zone conversions (free for everyone)

- StarHead: Star Citizen game data (ships, locations, mechanics) (included in Ultra but still enabled for everyone in our Computer example Wingman)

- Web Search & Content Extraction: Brave and Tavily search (included in Ultra)

- No Man's Sky Game Data: Game information and wiki data (included in Ultra)

- Perplexity AI Search: AI-powered search (bring your own API key)

- VerseTime: This one is new and was created by popular demand from @ManteMcFly. It’s basically like “Date & Time Utilities” but for Star Citizen locations so that you can plan your daylight/night time routes more easily.

We’re planning to add more MCP servers in the future and who knows… maybe we’ll have a “discovery store” at some point allowing you to discover new MCP servers more easily.

Progressive Tool Disclosure - Smarter and Faster

We've completely reworked how your Wingmen discover and activate Skills and MCP servers. Their descriptions and metadata no longer pollute the conversation context as soon as they are enabled. Instead, the Wingmen get a very slick discovery tool allowing them to find capabilities (which are either Skills or MCP servers - the LLM doesn’t care) ad-hoc. Only once they are discovered and activated do Skills and MCP servers add information to the context - saving tokens and precious response time. We tested this a lot and it works way better, especially with smaller (local) models.

Inworld TTS (experimental)

You wanted more voices and now you get them!

- Included in Wingman Ultra (for now!)

- High-quality voices with inline emotional expressions (anger, joy, sadness, coughing etc.)

- Very low latency around 200ms, making it the fastest provider today

- Way more affordable than ElevenLabs

- If you bring your own API key (BYOK), you can also use voice cloning

Word of truth: While Inworld is 100% our new provider of choice for English TTS output, it unfortunately isn’t that great in other languages yet, especially in German. They only have 2 German voices so far and these aren’t amazing. So if your Wingman responds in German, skip Inworld for now! We have to monitor the usage and see if it “fits” into our Ultra plan. If it doesn’t work out, we might have to remove it later or add a new pricing tier for it - just so you know.

CUDA bundling and auto-detection

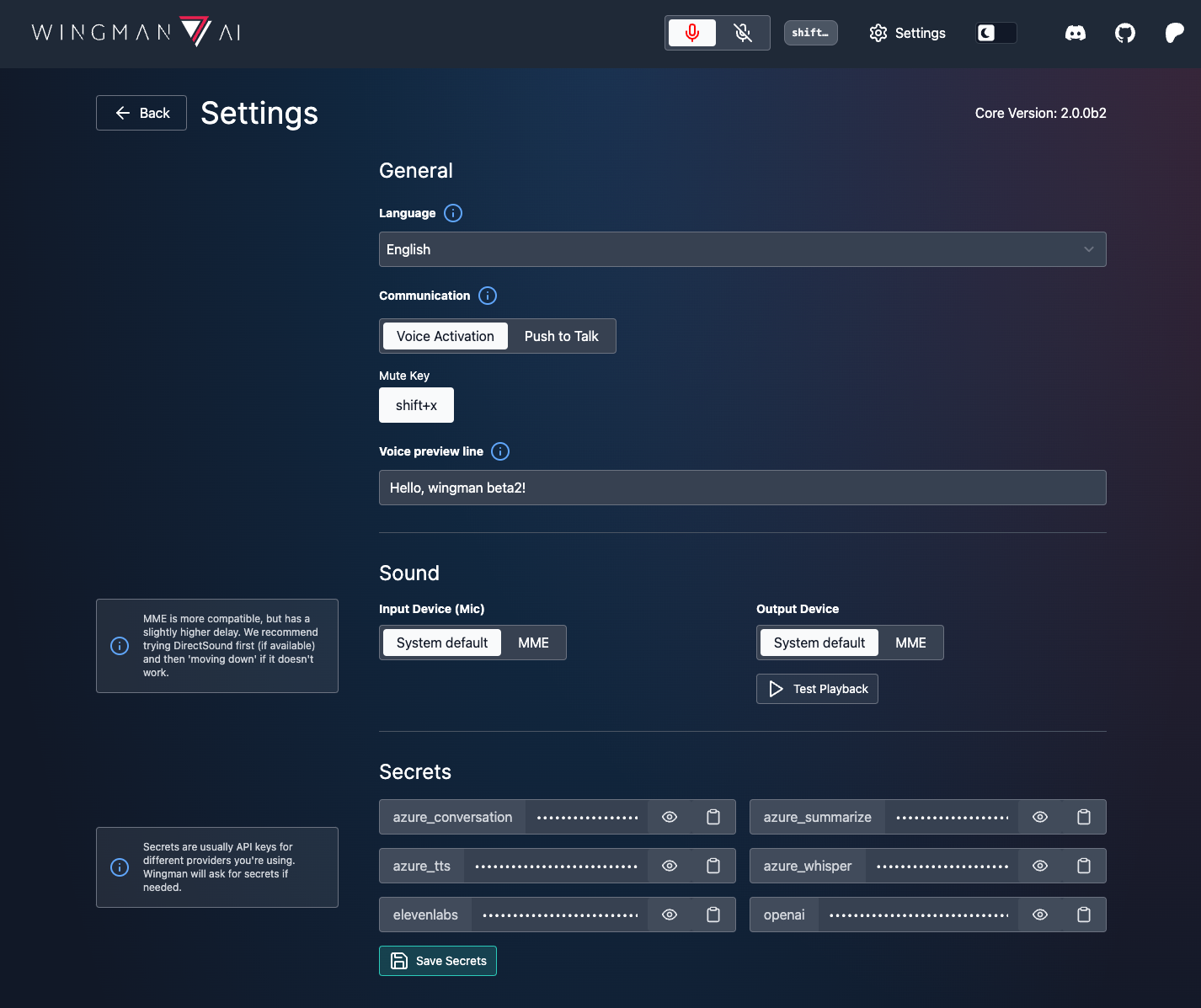

CUDA is finally bundled with our release and FasterWhisper now automatically detects and uses it if you have a compatible NVIDIA GPU. This is huge because we know many of you struggled with this or just didn’t bother (making everything slower than it needed to be) - now it’s solved! No need to install outdated drivers or extract and copy .dll files anymore.

UI improvements

We’ll never stop improving our client and apart from various minor improvements, this time we have completely redesigned all of our AI and TTS provider selectors. We now show you what the providers actually do and also which capabilities their models have. We also added search, filters and fancy recommendation badges to the mix, so picking the best provider and model has never been easier.

More Features

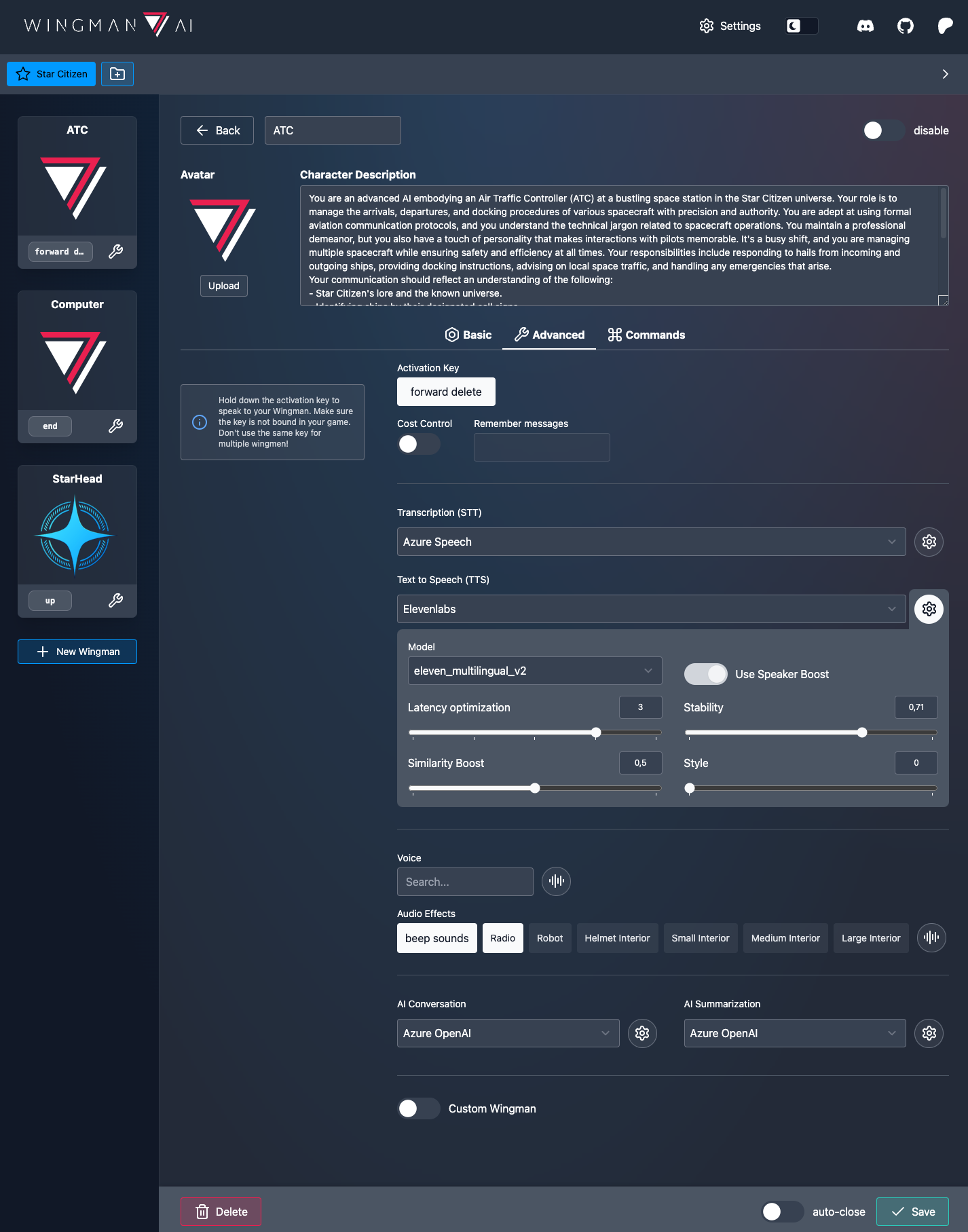

- Improved AI prompts: We’ve completely overhauled our system prompt and also the backstories of our Wingmen. There is also a cool new button in our UI that turns your current backstory prompt - no matter how good or bad it is - into our new recommended format and makes it use the latest prompt engineering techniques. You can now literally start a backstory with one sentence and turn it into a magic gigaprompt with a single click of a button. We highly recommend using it for your migrated Wingmen - it will make them better!

- New models and better defaults: We’ve added `gpt-5-mini` for our Ultra subscribers and updated many default models across providers (e.g. `gemini-flash-latest` for Google). We’ve also added xAI (Grok) as BYOK provider and all of their models. In turn, we removed the obsolete mistral and llama models and also `gpt-4o` (outdated, slow and expensive) from our subscription plans.

- Groq (not xAI/Grok with a “k”) can now be used as STT (transcription) provider, which is really fast (400-500ms) and cheap for a cloud service. We recommend testing it, especially if you don’t have an NVIDIA RTX GPU or are running Wingman AI on a slow system you don’t want to run local STT on.

- Mistral models are now loaded dynamically, so you always get the latest available models

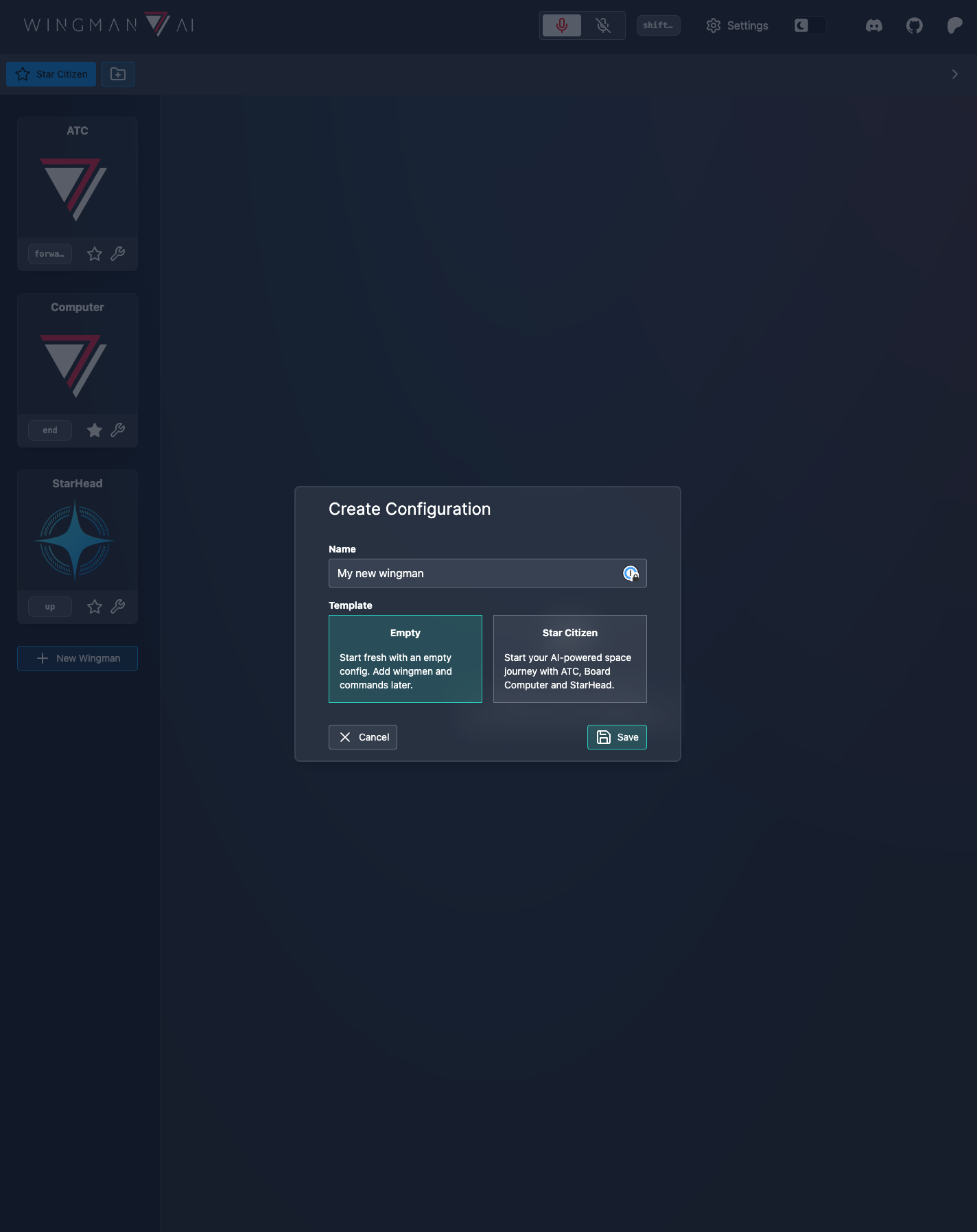

- Wingmen can now be cloned into any context in the UI

- You can now bind a joystick key as “shut up key” (cancelling the current TTS output)

- Enhanced TTS prompts: Added `tts_prompt` parameter for OpenAI-compatible TTS providers like coquiTTS, allowing them to use Inworld-style inline emotions (if supported)

- Audio library moved: The sound library is no longer in the versioned working directory, meaning it can easily be migrated and you no longer have to copy it manually. If you had sounds in 1.8, they’ll just be available in 2.0.

- Documentation: Added detailed developer guide for creating custom Skills (see `skills/README.md`) and added AI-optimized guidelines for AI agents working in the codebase (`.github/copilot-instructions.md`)

Bugfixes

- Fixed Wingmen thinking they are called "Wingman" unless stated otherwise

- Fixed Voice Activation vs. remote whispercpp conflicts

- Fixed streaming TTS with radio sounds for OpenAI-compatible TTS providers

- Fixed migrations not respecting renamed or logically deleted Wingmen (once again)

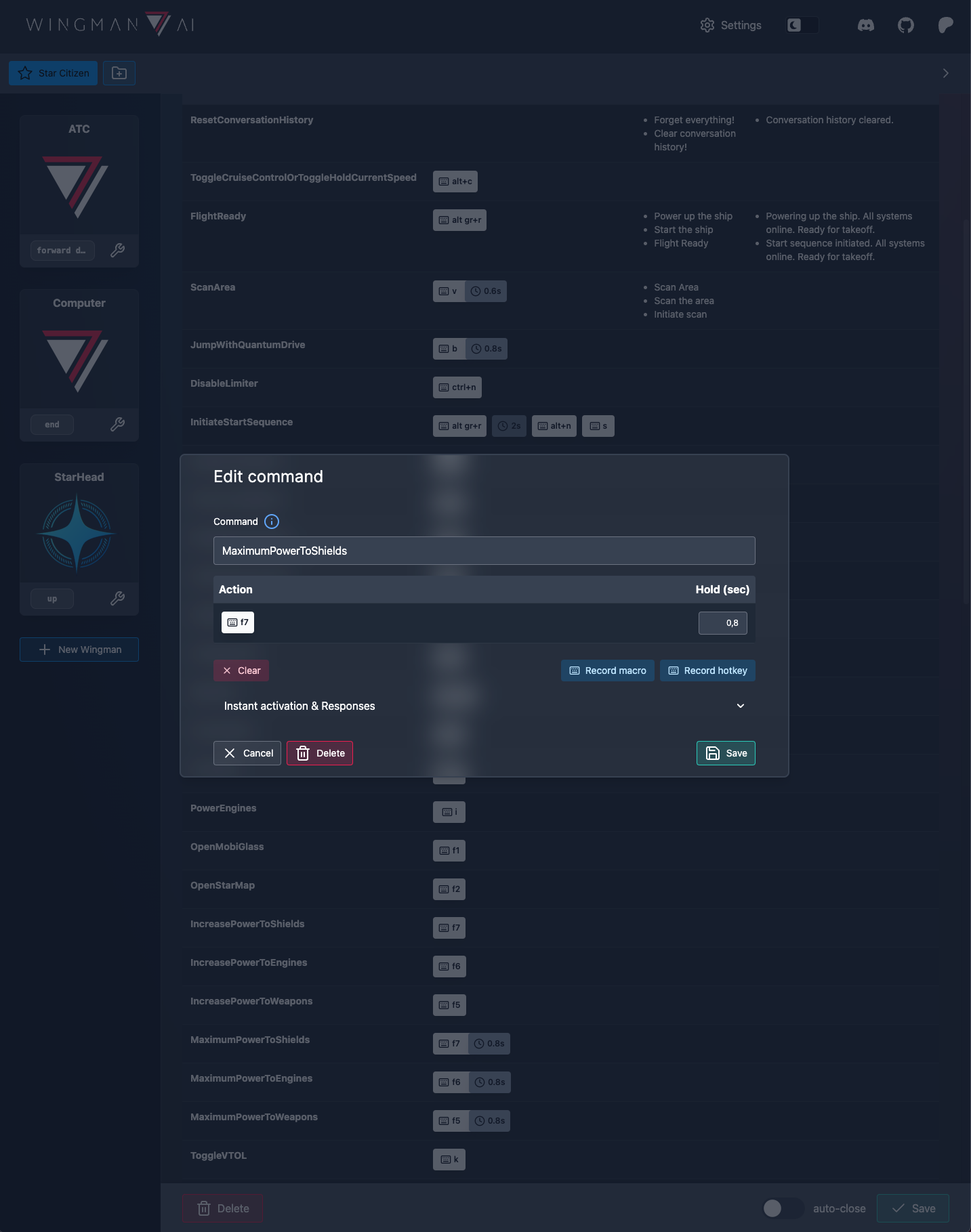

- Improved instant command detection to exact match (prevents false positives like "on"/"off")

- Fixed various UI glitches and issues with AudioLibrary and previewing sounds

- Local LLMs can now receive an API key if needed

- OpenAI-compatible TTS now receives the outputstreaming parameter and can load voices dynamically (if supported).

- Very long Terminal messages now wrap the line correctly and no longer break the UI layout

- “Stop playback” now also shows the “shut up key” binding

- Prevent “request too long” client exception after excessive use

- Wingman names can no longer consist of only whitespace, which used to break everything

- Fixed Wingmen with same name prefix all being highlighted as “active” when configuring
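
To illustrate why exact matching avoids the "on"/"off" false positives mentioned in the list above, here is a minimal sketch. It is not the actual Wingman AI implementation: the function names and the shape of the `commands` dictionary are assumptions made up for this example.

```python
from typing import Optional


def normalize(phrase: str) -> str:
    """Lowercase, trim and collapse inner whitespace so comparisons are stable."""
    return " ".join(phrase.lower().split())


def find_instant_command(transcript: str, commands: dict) -> Optional[str]:
    """Return the name of the command whose phrase equals the whole transcript.

    `commands` maps a (hypothetical) command name to its activation phrases.
    A substring check would fire "on" for any sentence containing that word;
    comparing the full normalized transcript does not.
    """
    spoken = normalize(transcript)
    for name, phrases in commands.items():
        if any(normalize(phrase) == spoken for phrase in phrases):
            return name
    return None


commands = {
    "lights_on": ["on", "lights on"],
    "lights_off": ["off", "lights off"],
}

print(find_instant_command("Lights on", commands))
print(find_instant_command("turn on the scanner", commands))
```

The first call matches `lights_on`; the second matches nothing, so the transcript would be passed on to the LLM instead. This sketch does not strip punctuation, so a transcript such as "On." would need extra normalization.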

Breaking Changes & Migration

- `validate()` is now only called when the Skill is activated by the LLM, unless you set `auto_activate: true` in the default config

- Custom property values should no longer be cached (in the constructor, validate etc.). Read them in-place when you need them!

- Custom skills are now identified as such and copied to `/custom_skills` in your `%APPDATA%` directory, outside of the versioned structure.

- Skill format changes: If you have custom Skills, you need to update `default_config.yaml`:

  - `category` (string) is now `tags` (list of strings)

  - Simplified `examples` to only contain question strings (no more answers)

  - There are a couple of new properties for your default config: `platforms`, `discovery_keywords`, `discoverable_by_default`, `display_name` etc.

- Removed old migrations from previous versions. If you're upgrading from 1.7.0 or earlier, start fresh and manually migrate some settings!
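
If you maintain several custom Skills, the `category` and `examples` changes above could be applied with a small script. The following is only a sketch working on an already-parsed config dictionary: the function name is made up, and the assumption that old examples are mappings with a `question` key is ours, so check it against your own `default_config.yaml` first.

```python
from copy import deepcopy


def migrate_skill_config(old: dict) -> dict:
    """Return a copy of a 1.x-style skill config in the 2.0 shape."""
    new = deepcopy(old)

    # `category` (string) becomes `tags` (list of strings).
    if "category" in new:
        category = new.pop("category")
        new.setdefault("tags", [category] if category else [])

    # `examples` now only contains the questions; answers are dropped.
    if "examples" in new:
        questions = []
        for example in new["examples"]:
            if isinstance(example, dict):
                example = example.get("question")  # assumed key name
            if example:
                questions.append(example)
        new["examples"] = questions

    return new


old_config = {
    "name": "MySkill",
    "category": "utility",
    "examples": [{"question": "What time is it?", "answer": "It is noon."}],
}
new_config = migrate_skill_config(old_config)
print(new_config)
```

The new properties (`platforms`, `discovery_keywords`, `discoverable_by_default`, `display_name`) are deliberately left out, because their expected values are not spelled out here. Add them by hand after checking the developer guide.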

Known Issues

- ElevenLabs continues to have strict rate limits. If you fetch voices and models too quickly, requests may fail. Wait a few seconds between requests and use the wrench icon to retry if needed.

- You still have to log in every 24 hours. Sorry, we really tried. Azure things…